News & Updates

- [2025/10] We have released the technical report on xRouter, an LLM orchestration framework for effective and efficient query routing.

- [2025/10] See our UserRL work featured on social media, including Twitter and WeChat!

I am also going to give several talks on user-centric agent design and evaluation. See you online!

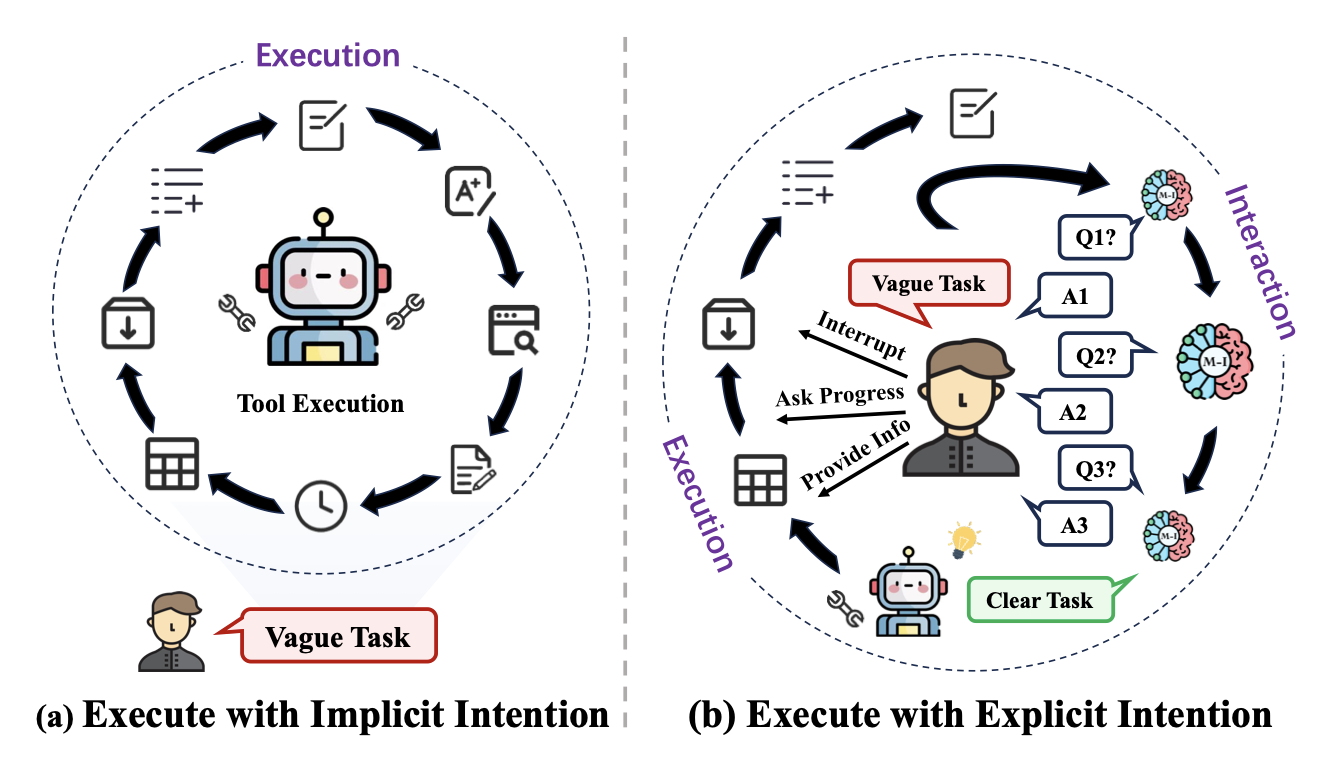

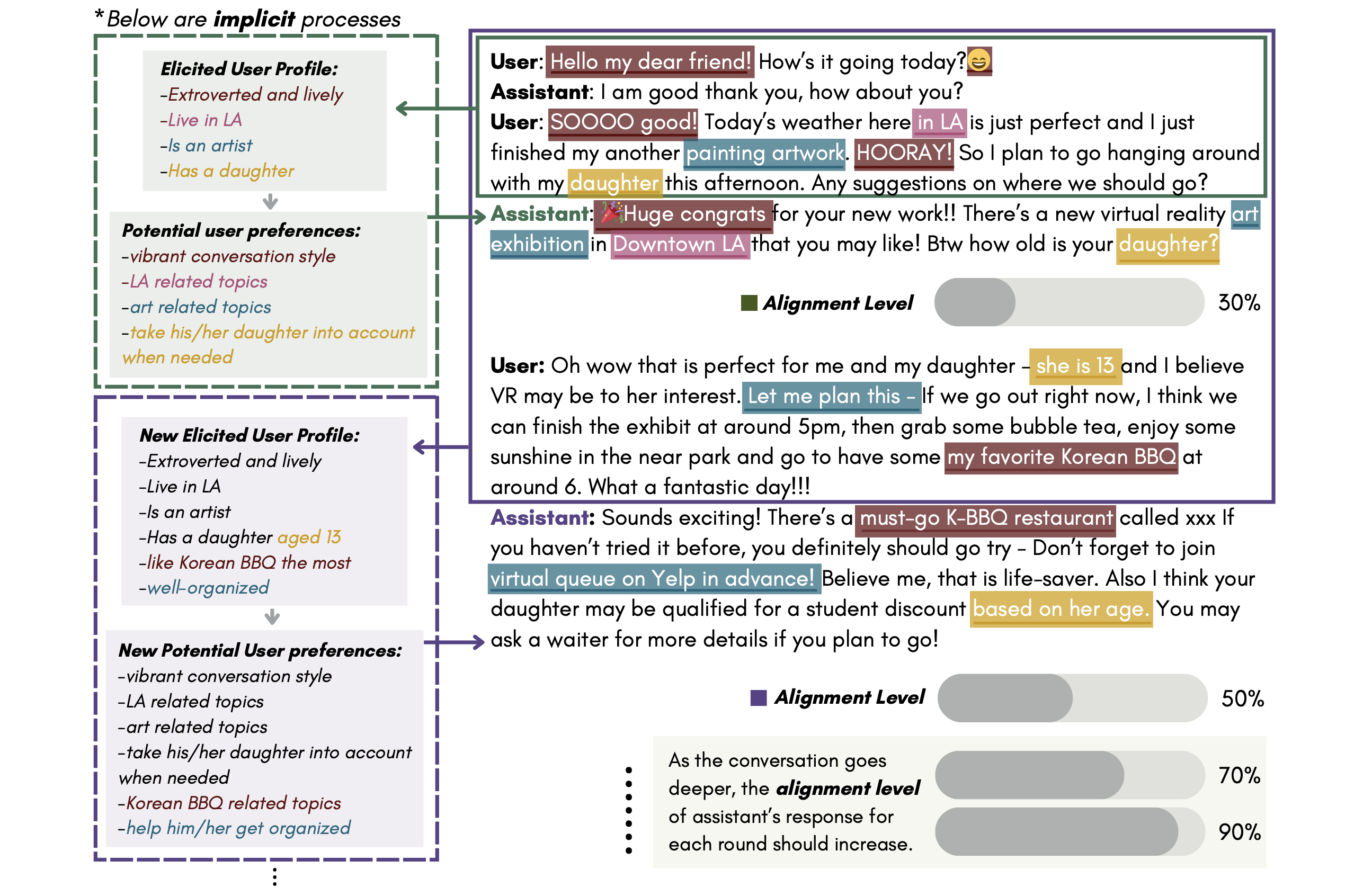

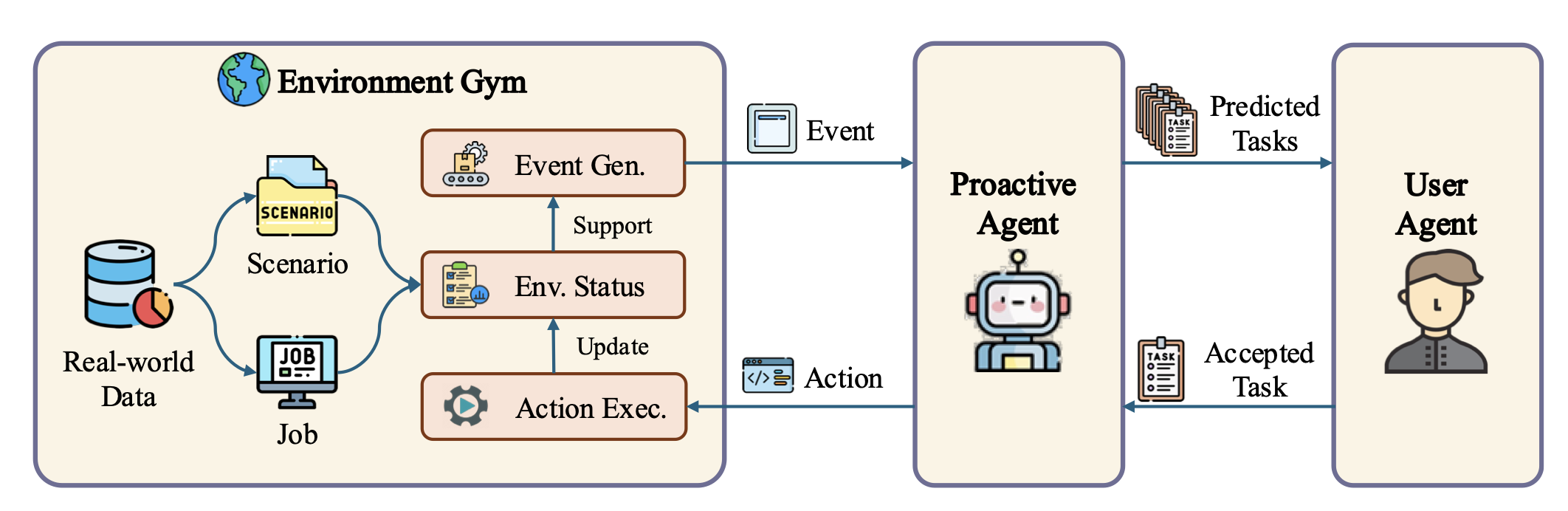

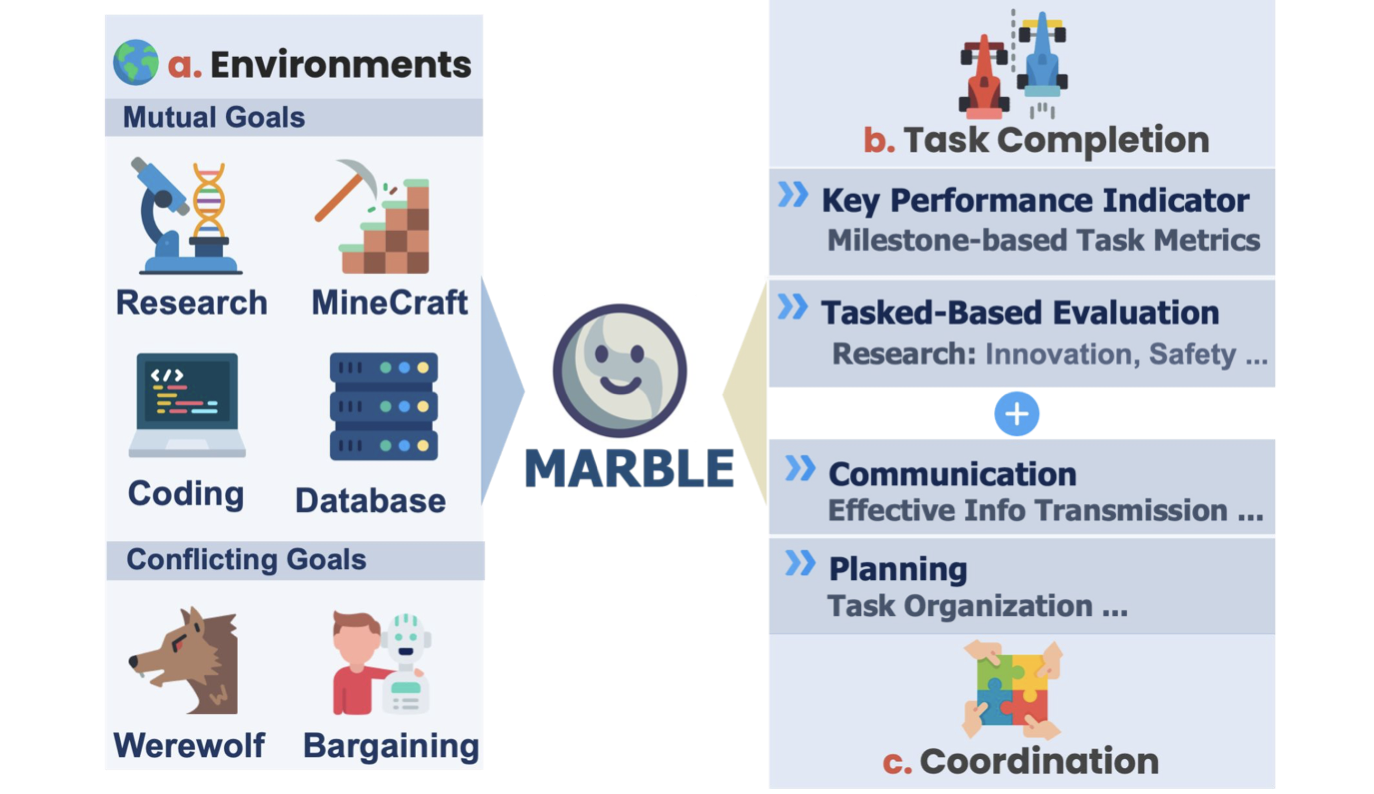

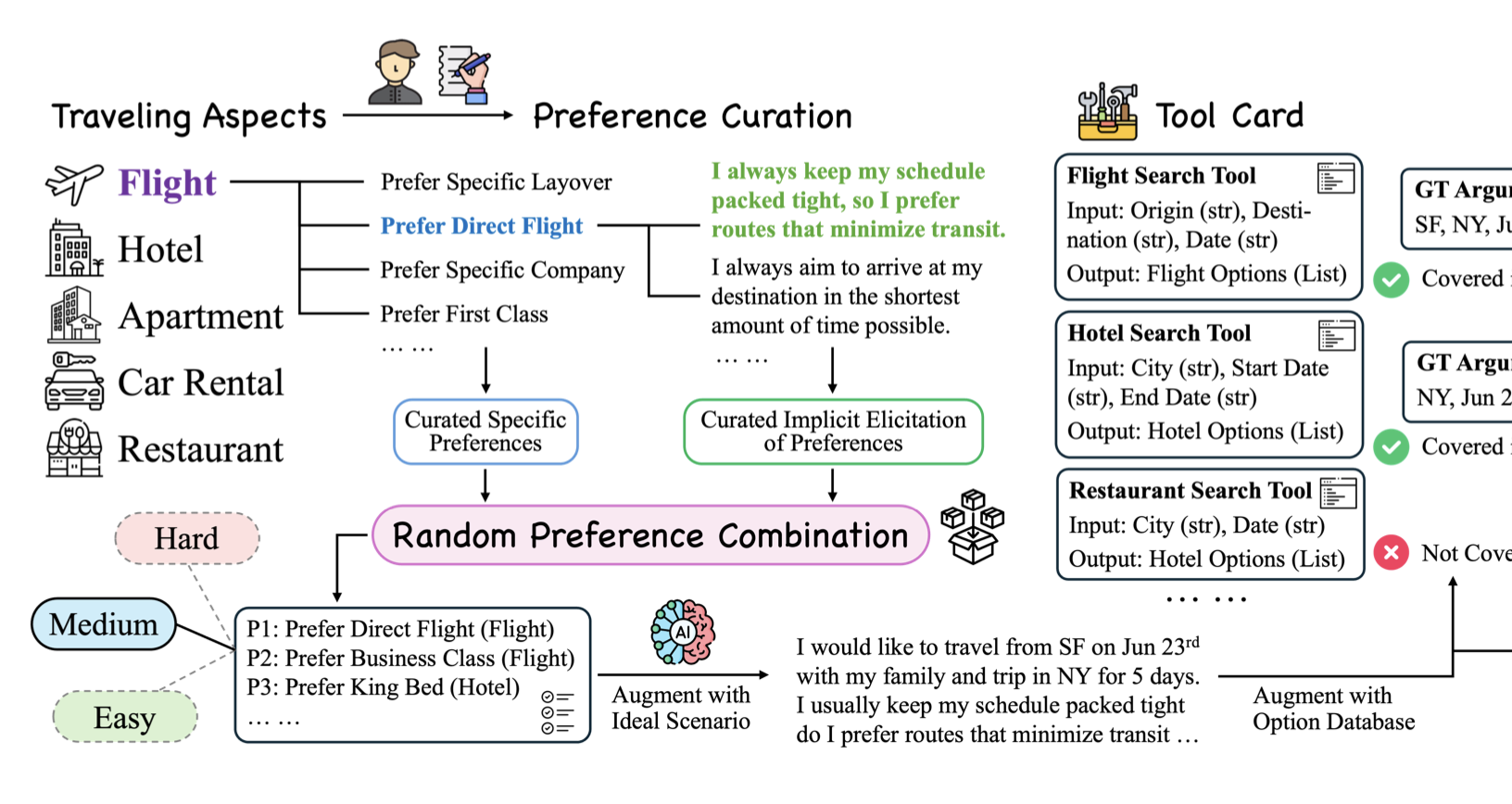

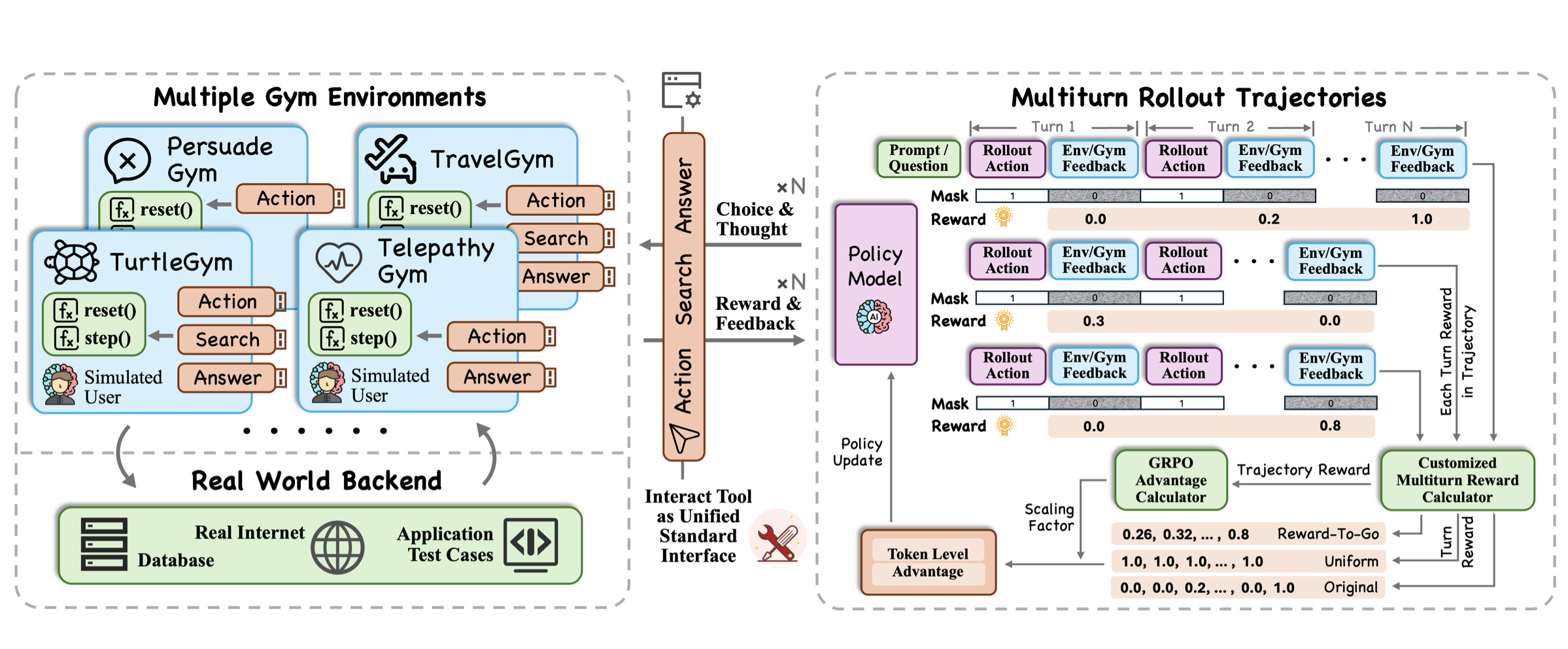

- [2025/09] My series of works at Salesforce AI Research on LLM agents for user alignment is coming out!

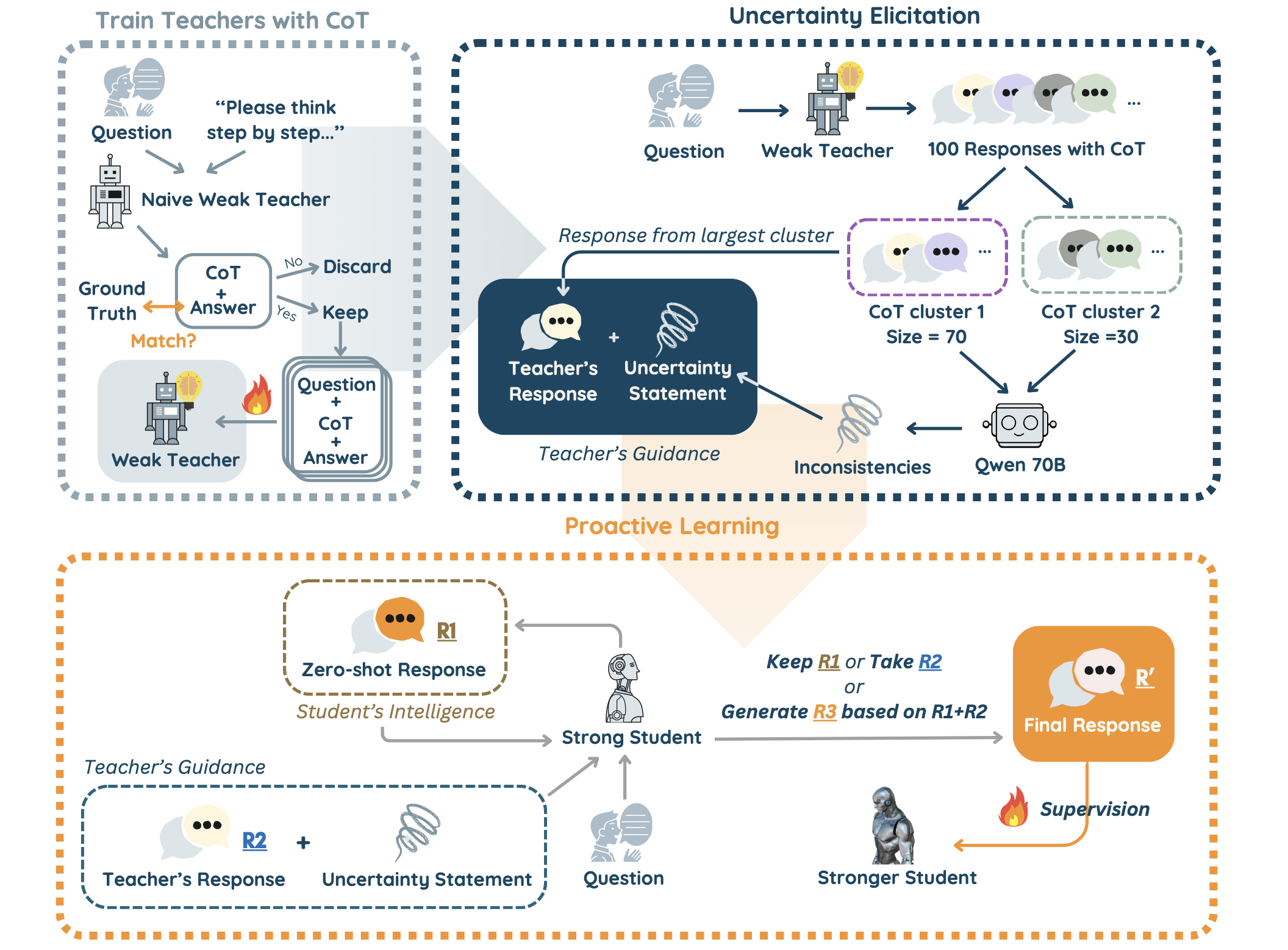

Check out the UserRL paper, which builds upon and expands our previous UserBench paper. We also released our code for all gym environments and training here.

- [2025/09] Our ToolRL paper has been accepted to NeurIPS 2025!

Thanks to all the collaborators! Hope to see you all in San Diego this December!

- [2025/08] I have five first-suthor and co-author works accepted by EMNLP 2025,

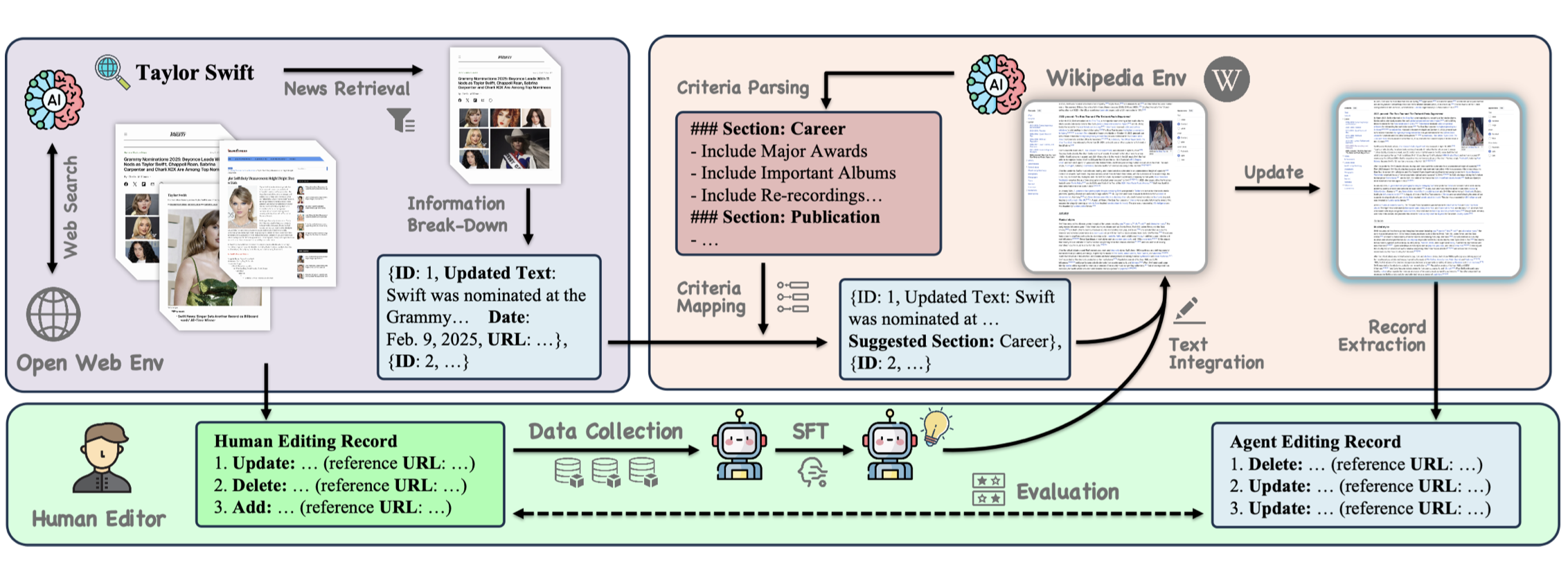

including the ModelingAgent paper. Congratulations to all the authors! Hope to see you all at Su Zhou this November!

- [2025/05] Glad to announce that I am joining Salesforce AI Research for my 2025 summer internship!

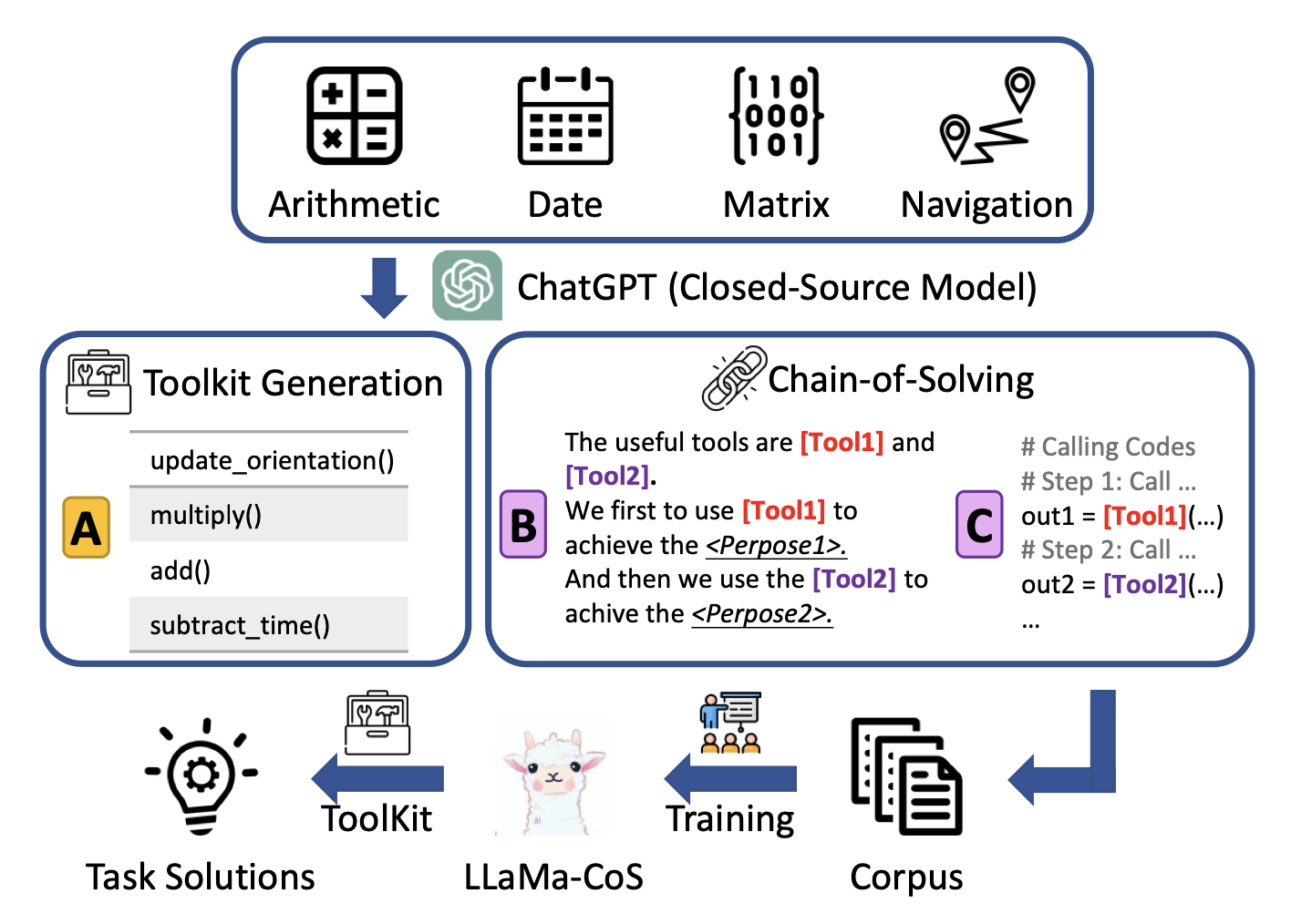

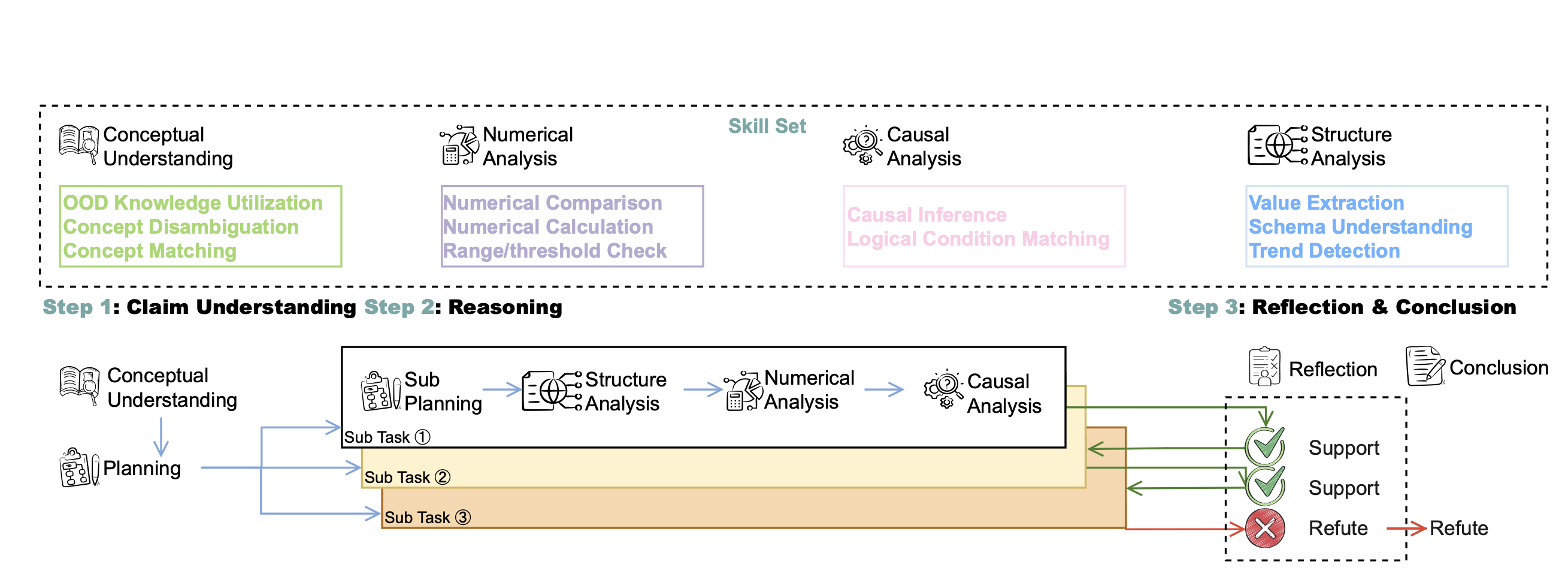

- [2025/05] My newest paper on how to design agents for mathematical modeling is released! Check the ModelingAgent paper to see how to ground math in real-world problem solving.

- [2025/05] Glad to announce that I have six first-author and co-author works accepted to ACL 2025.

My work EscapeAgent was accepted to the main track. Congratulations to all the authors!

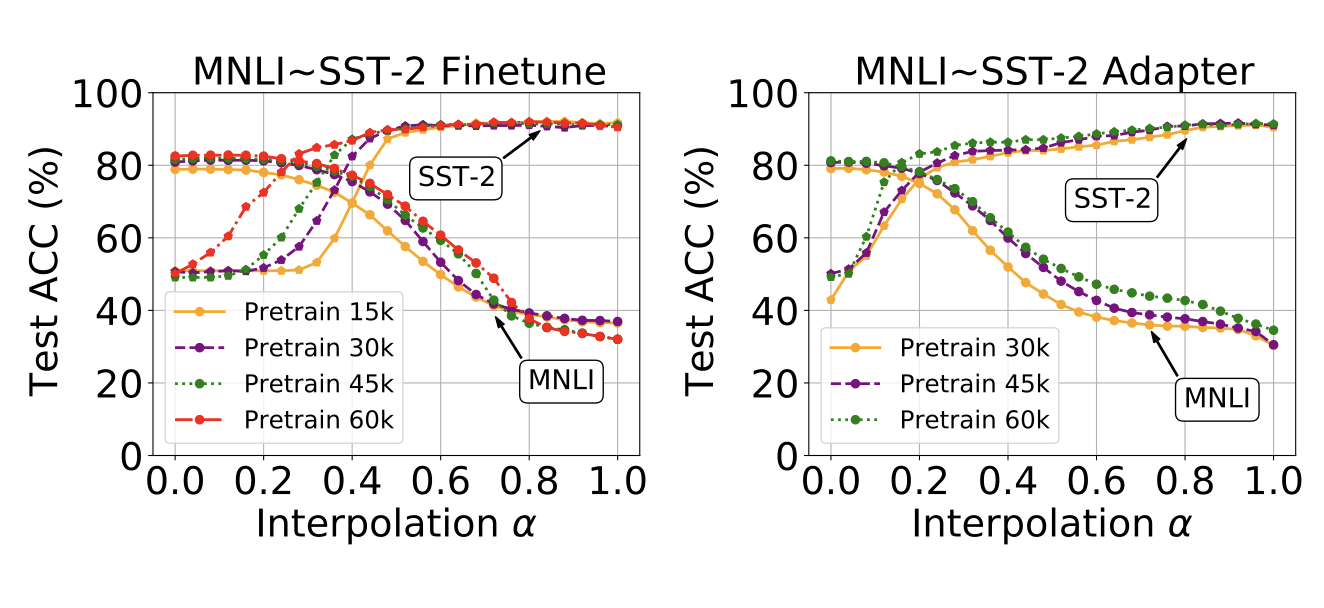

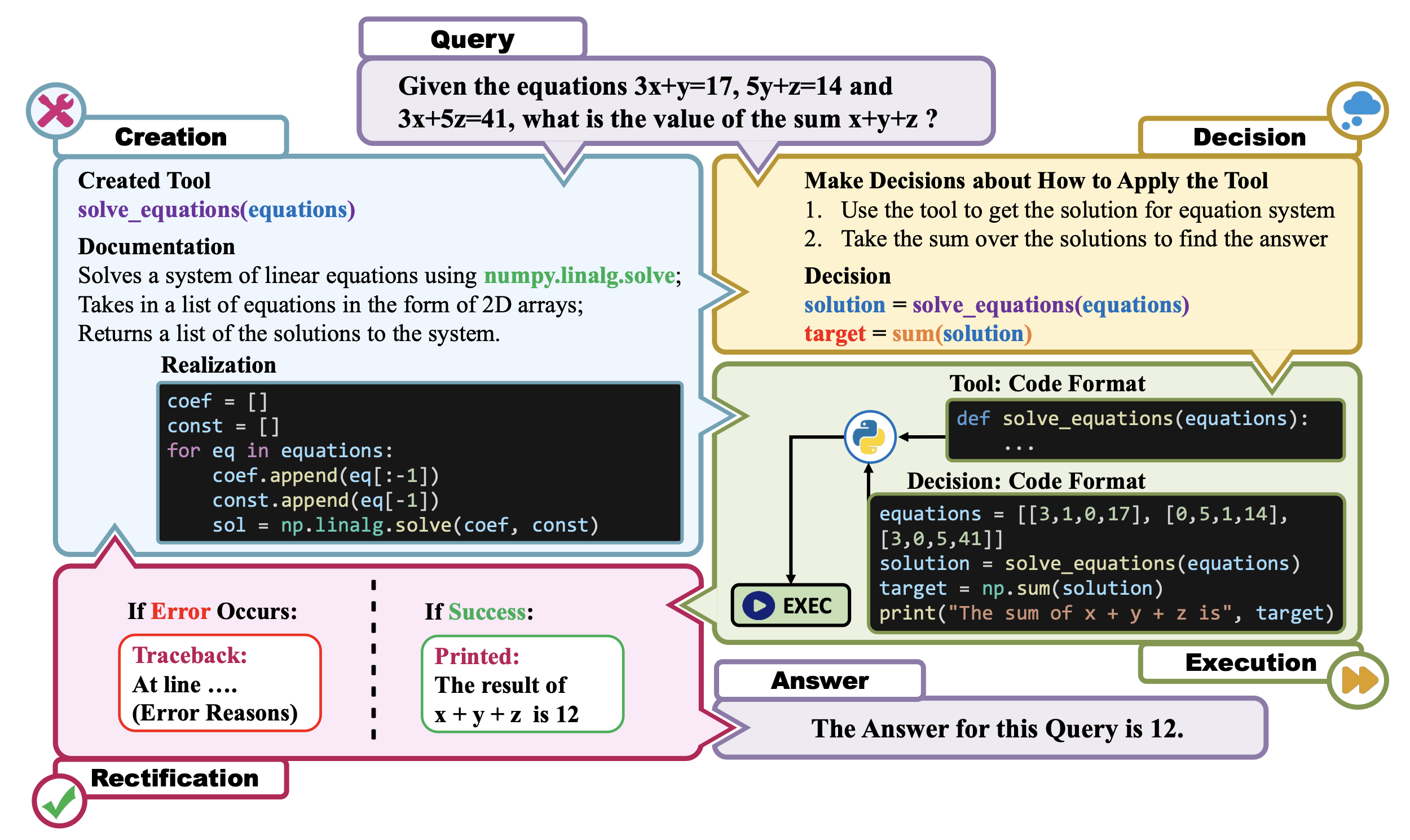

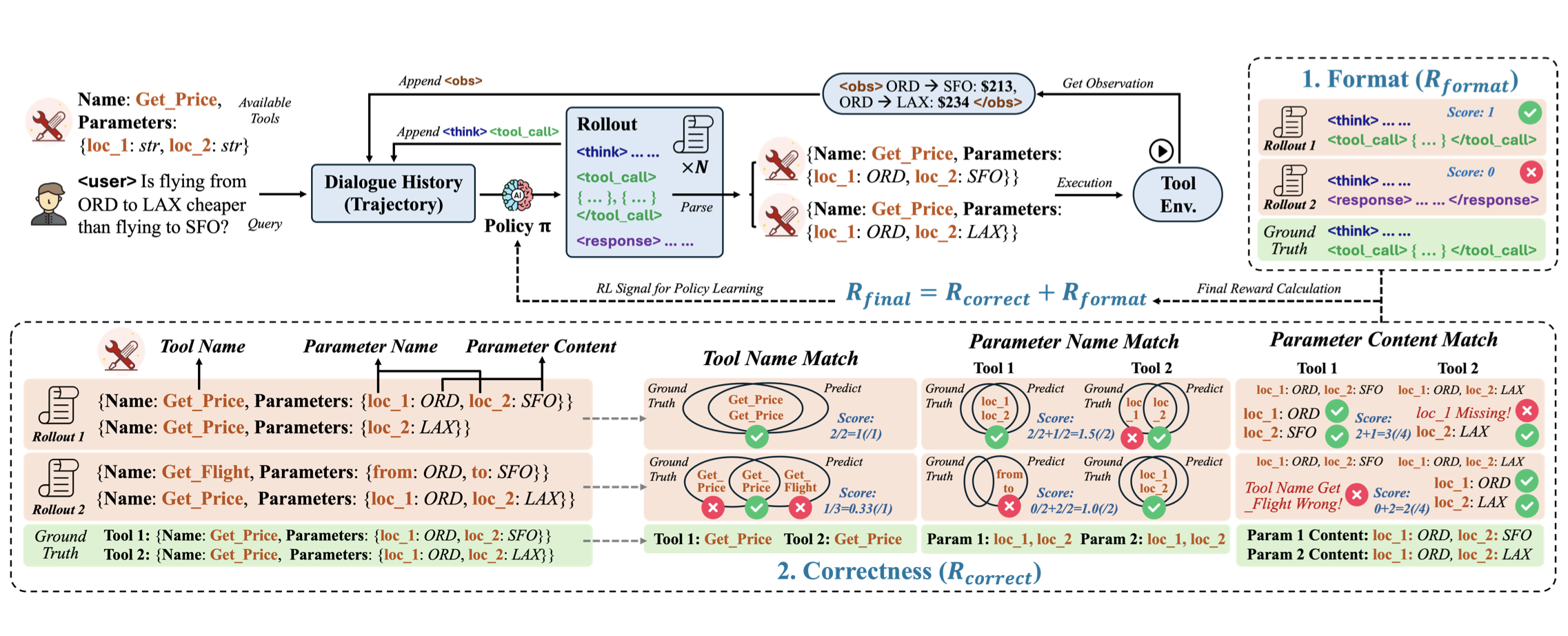

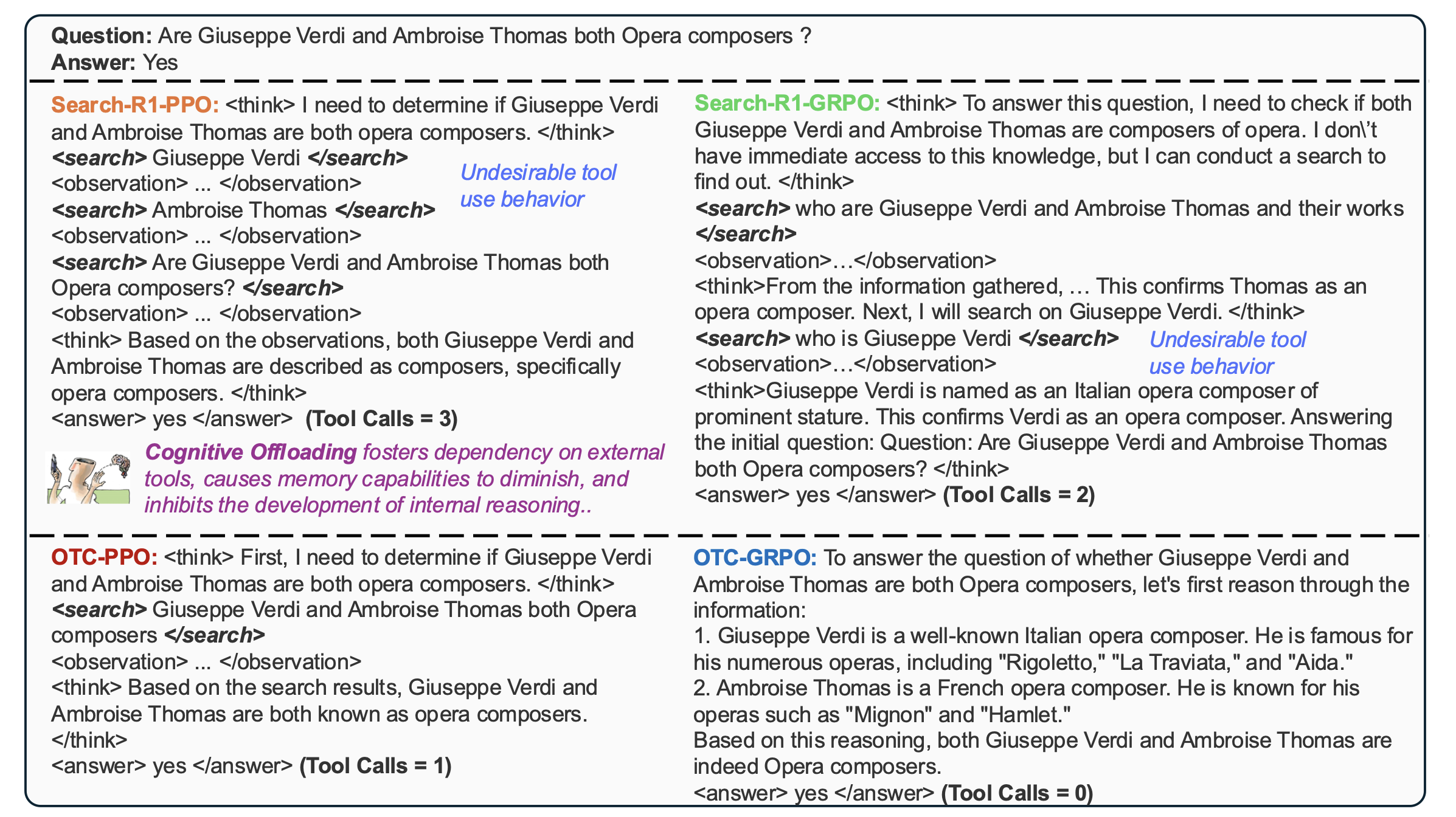

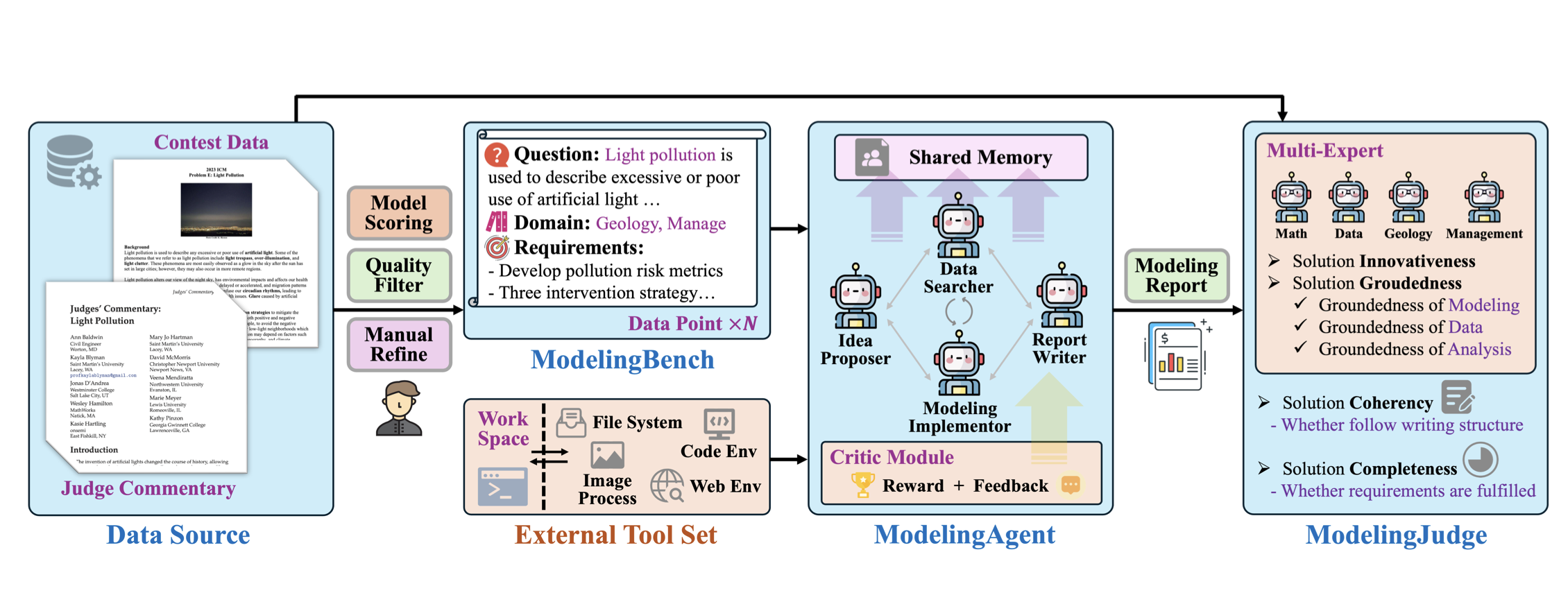

- [2025/04] We released our ToolRL paper, which focuses on reward shaping for LLM tool-use tasks. We also released our code here. Check it out for more details!

- [2025/04] I am attending NAACL 2025 in New Mexico this year! Hope to chat with you there!

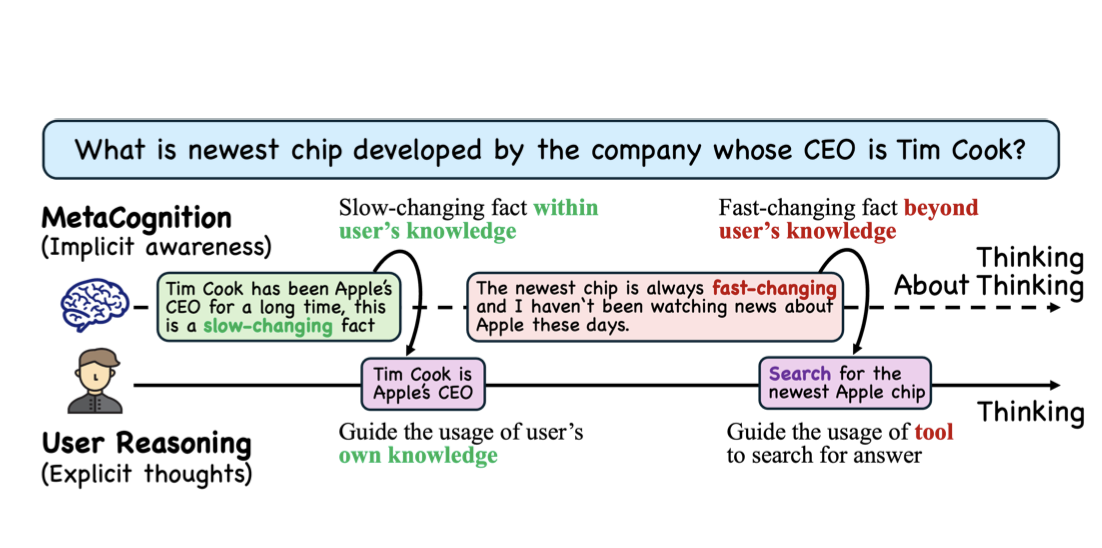

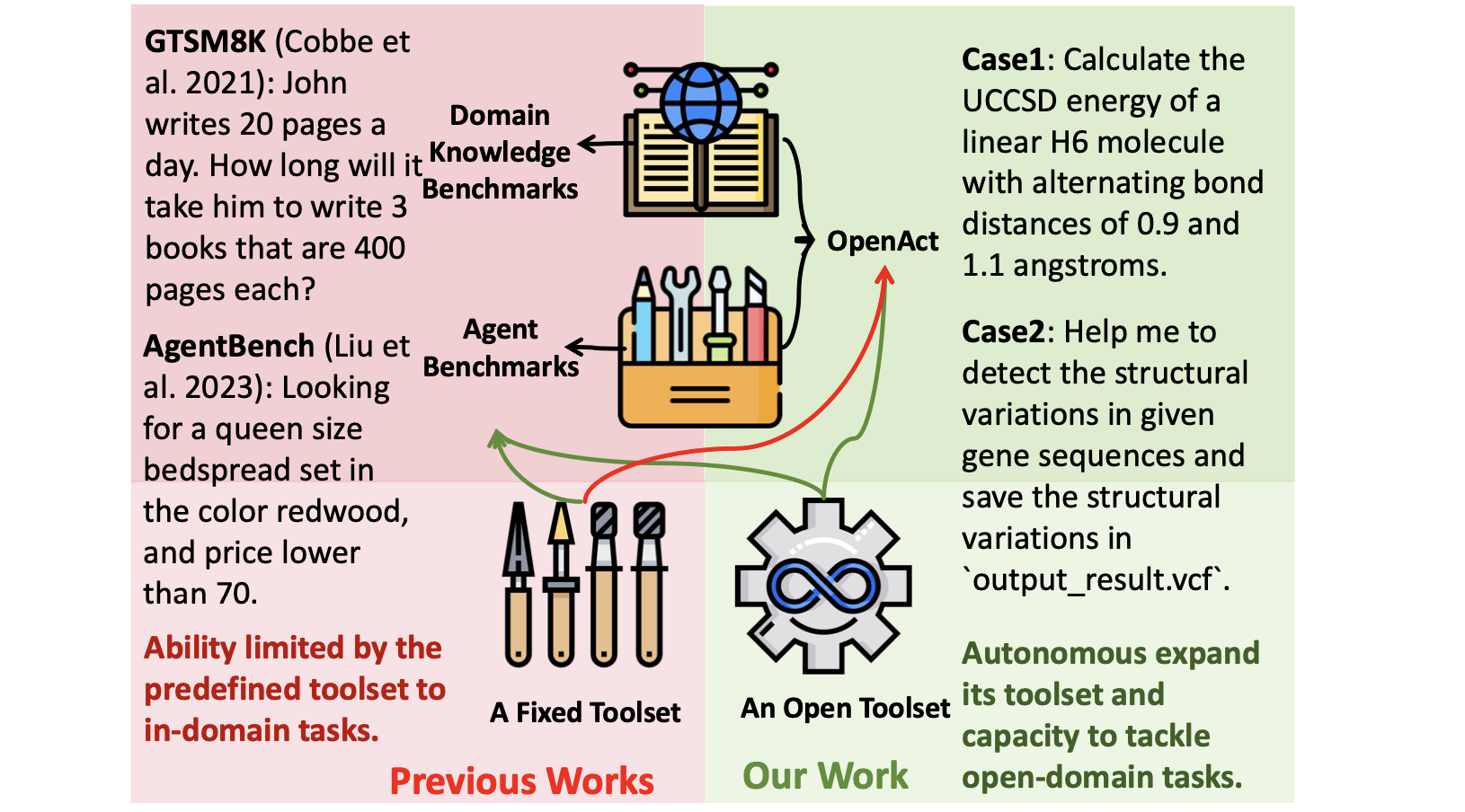

- [2025/03] We released the SMART paper, which for the first time systematically evaluates the tool overuse problem in LLMs and LLM-driven agents.

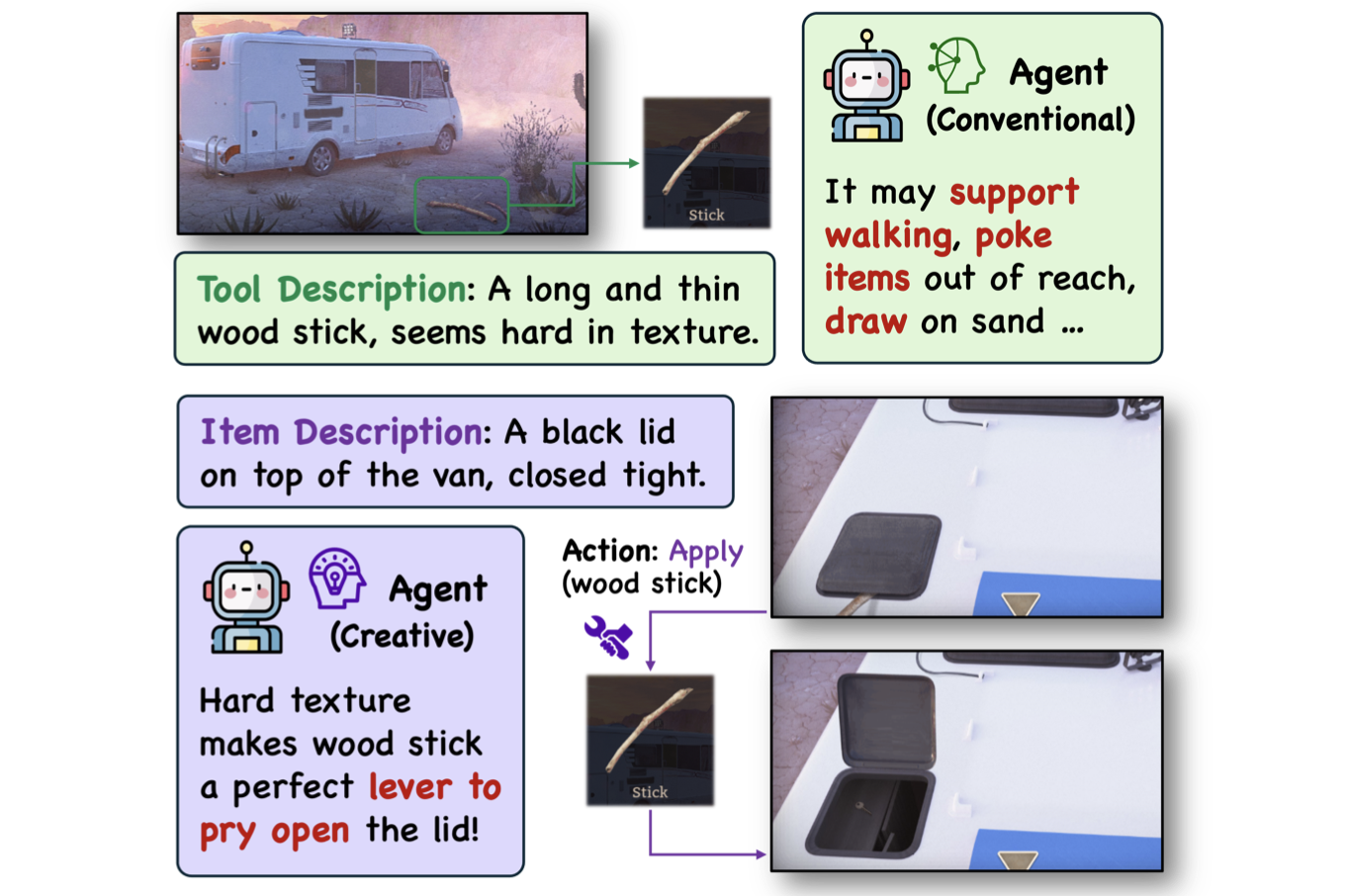

- [2024/12] The first paper of my PhD is out! Check the EscapeBench paper to see if your LLM is creative enough to escape from the sandbox environment!

- [2024/12] I am participating in EMNLP 2024 in Miami this year! Hope to see everyone!

- [2024/12] Having graduated from Tsinghua, I am now starting my PhD at UIUC! Looking forward to working with you all!

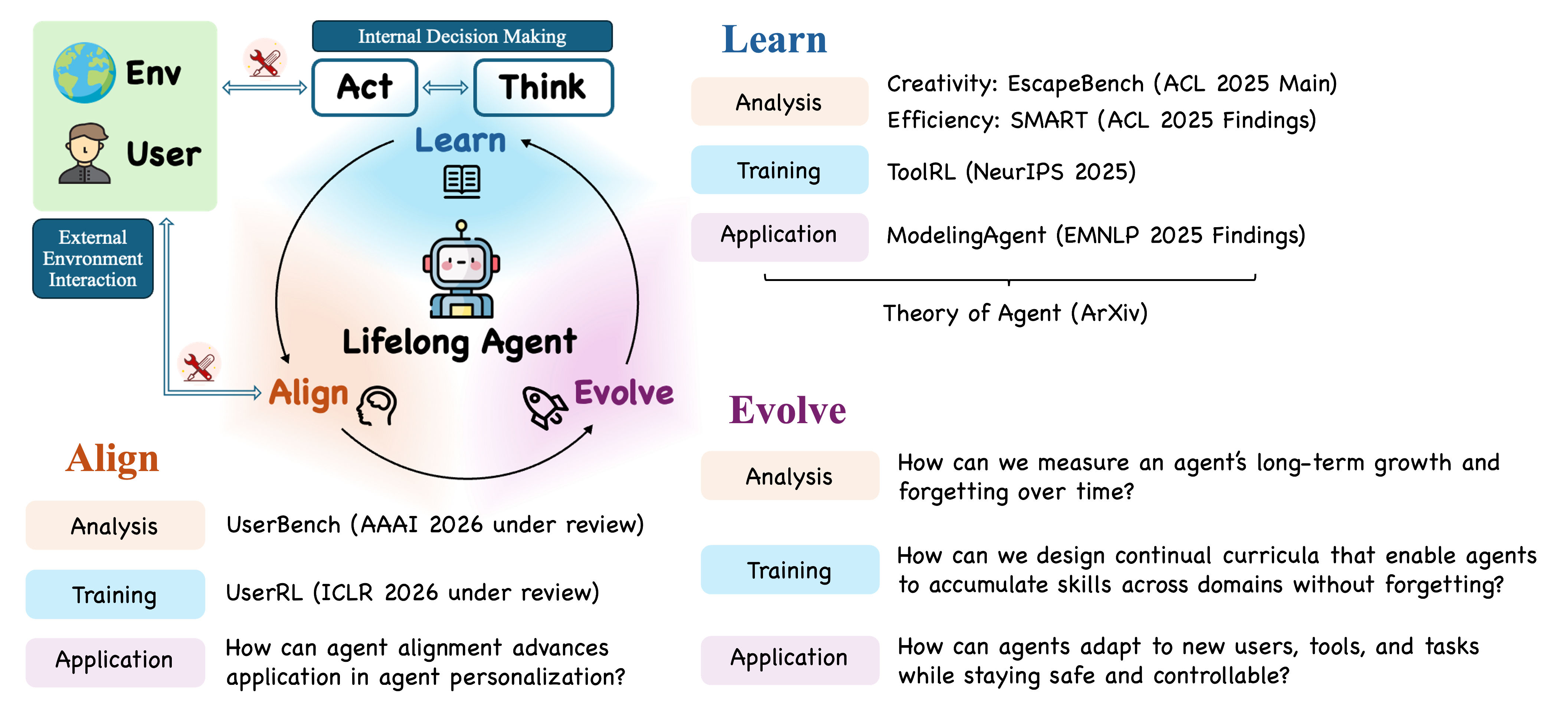

Research Focus

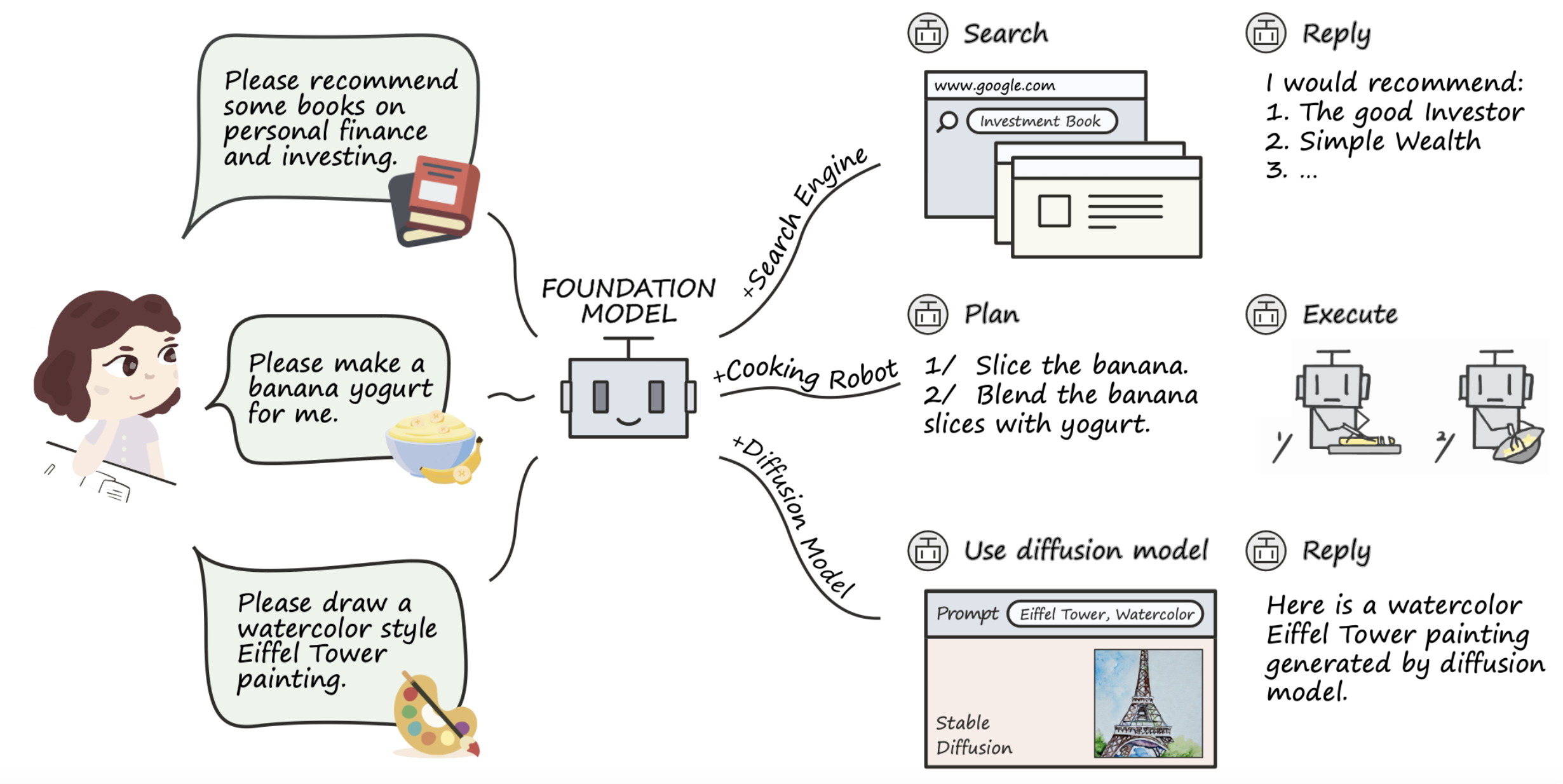

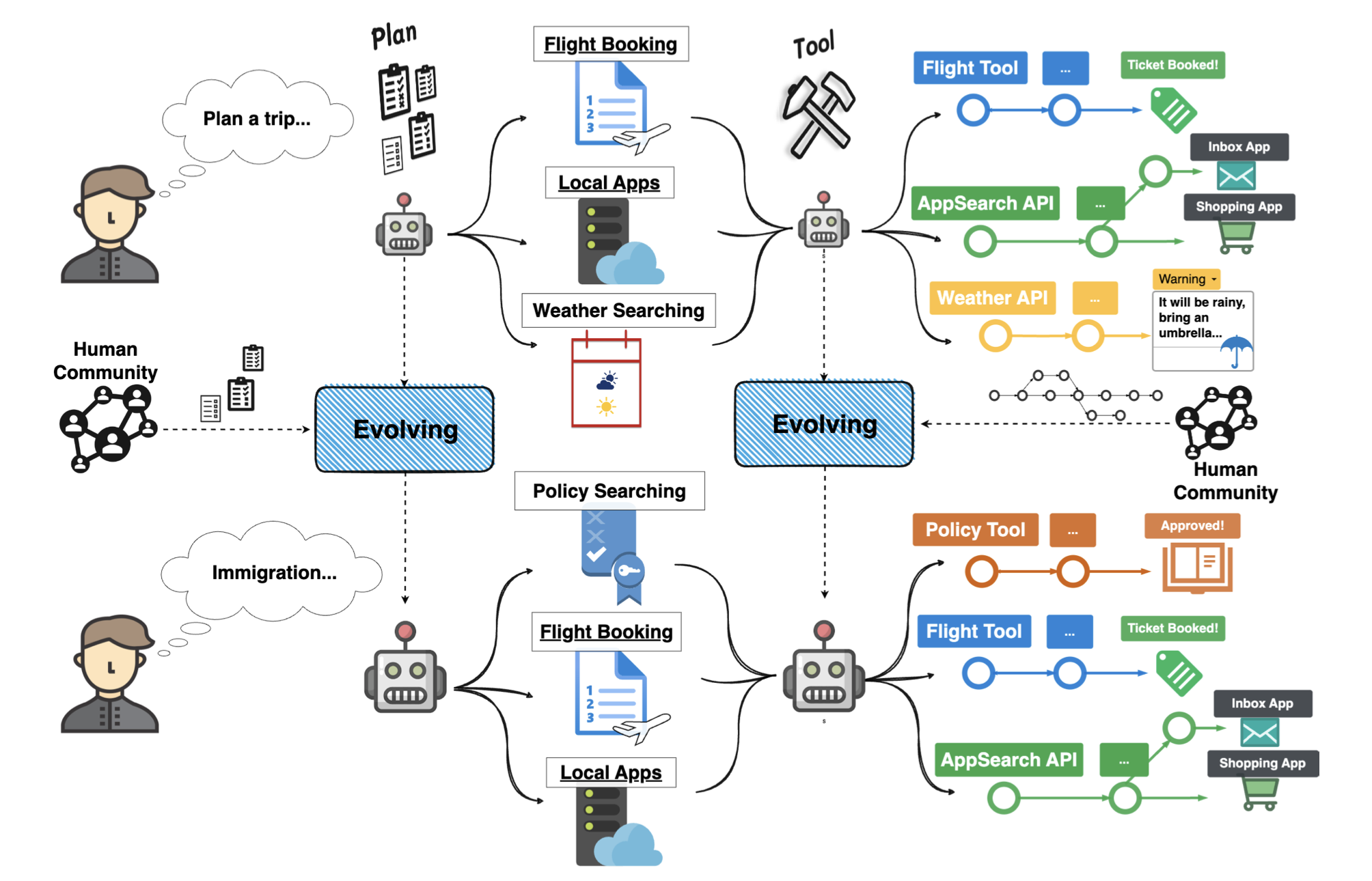

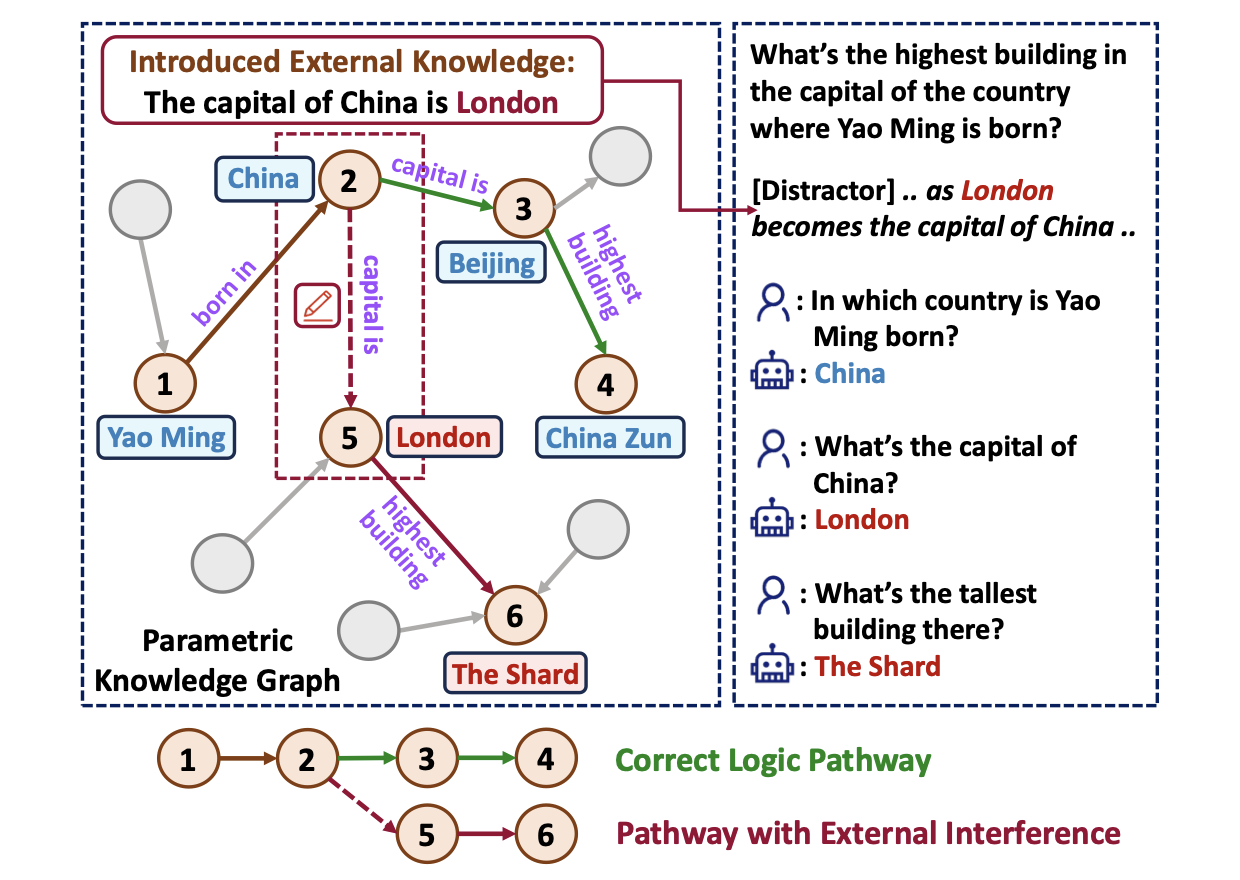

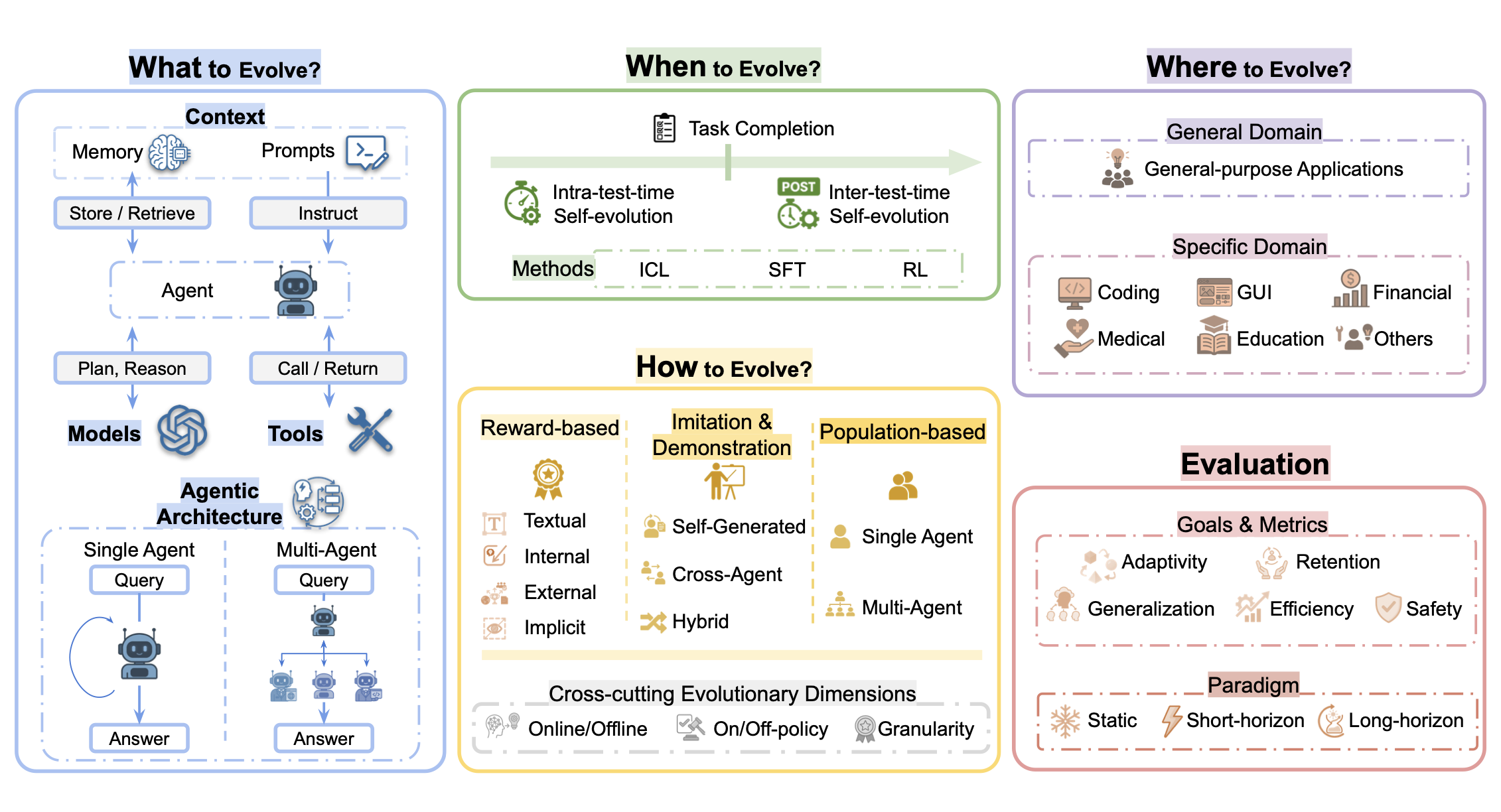

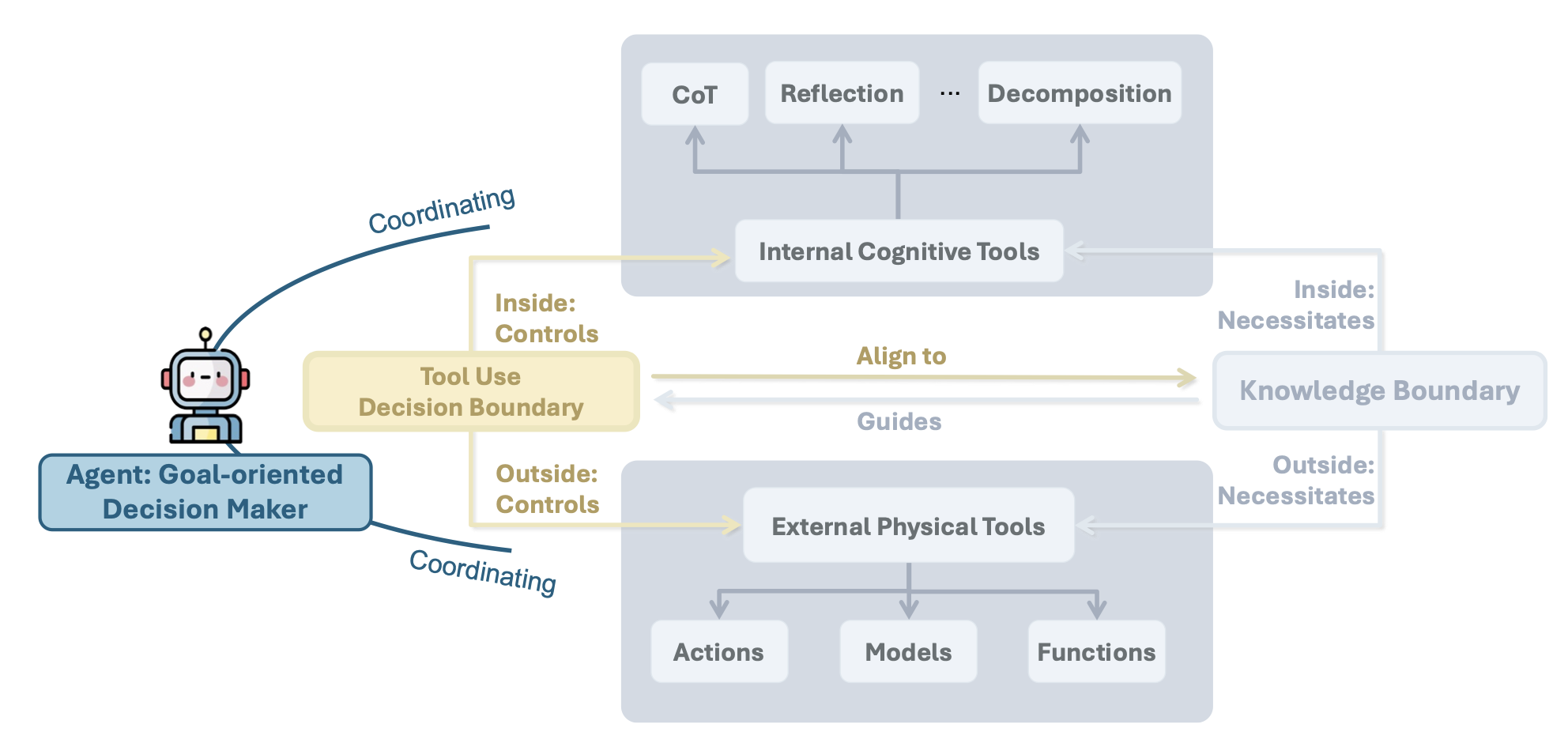

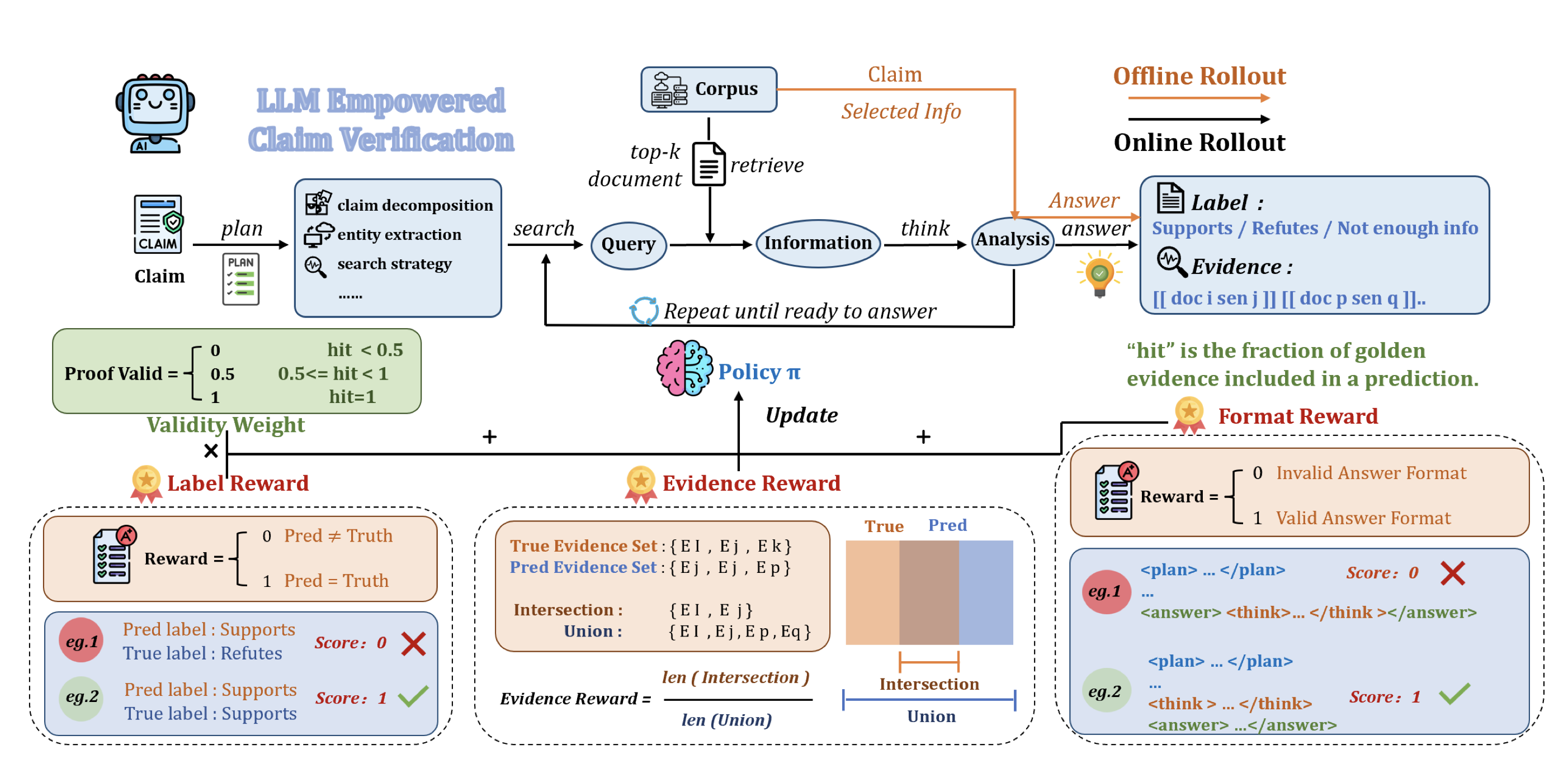

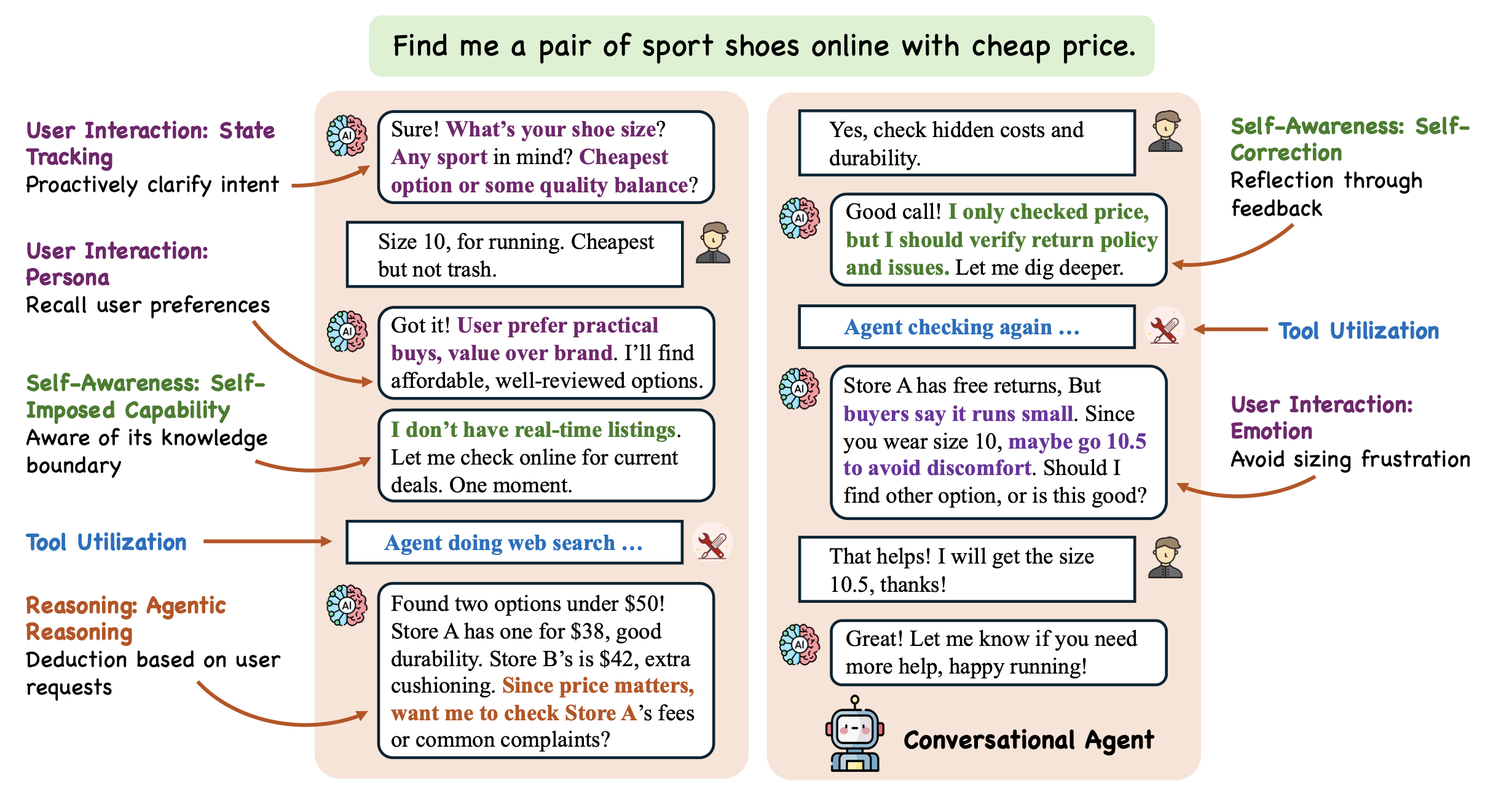

My research focuses on transforming large language models (LLMs) into truly autonomous agents that operate reliably in dynamic, ambiguous real-world settings. While today's LLMs excel at analytical reasoning when problems are well-formed and knowledge is encoded in their parameters, they struggle when tasks demand up-to-date information, adaptive behavior, or nuanced understanding of human intent. I envision agents that combine internal reasoning with external tool use (e.g., search, calculators, APIs) to pursue goals, incorporate feedback, and improve over time—moving beyond static models toward systems that learn continuously.

To guide this evolution, I propose the Lifelong Agent framework, which characterizes agentic intelligence as an iterative cycle of Learn, Align, and Evolve. Learn equips agents to acquire, organize, and apply knowledge for creativity and efficiency; Align adapts behavior to environment- and user-specific preferences, goals, and constraints; and Evolve drives sustained self-improvement via memory, reflection, and feedback across tasks and environments. My work integrates three tightly coupled threads at each phase—analysis (diagnosing agent behavior), training (designing methods that enhance capabilities), and application (deploying agents to solve real-world problems). This agenda aims to produce scalable, tool-using agents that continually adapt, remain aligned with human values, and deliver robust, practical impact.